ChatGPT gets tone controls: OpenAI adds new personalization options

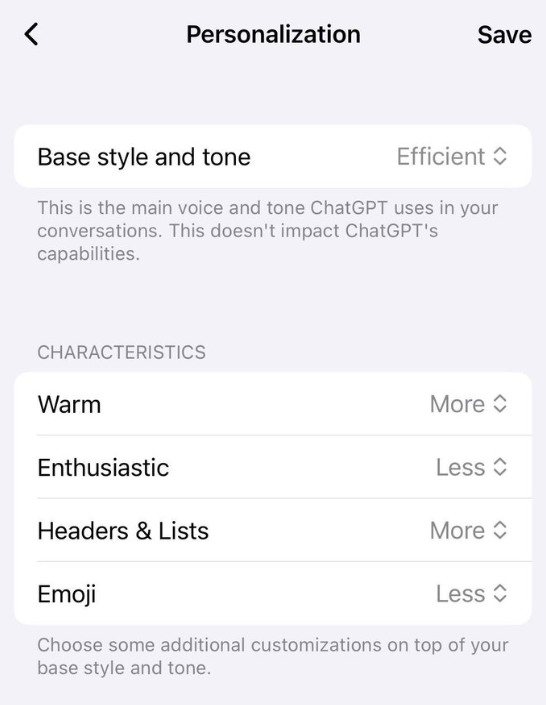

OpenAI now lets users customize how ChatGPT communicates. The new "Personalization" settings include options for adjusting warmth, enthusiasm, and formatting preferences like headings, lists, and emojis. Each setting can be toggled to "More" or "Less." Users can also pick a base style, such as "efficient" for shorter, more direct responses.

OpenAI says these settings only affect the chatbot's tone and style, not its actual capabilities. The new options likely work as an extension of the custom instructions feature, which sits in the same settings window.

Google's open standard lets AI agents build user interfaces on the fly

Google’s new A2UI standard gives AI agents the ability to create graphical interfaces on the fly. Instead of just sending text, AIs can now generate forms, buttons, and other UI elements that blend right into any app.

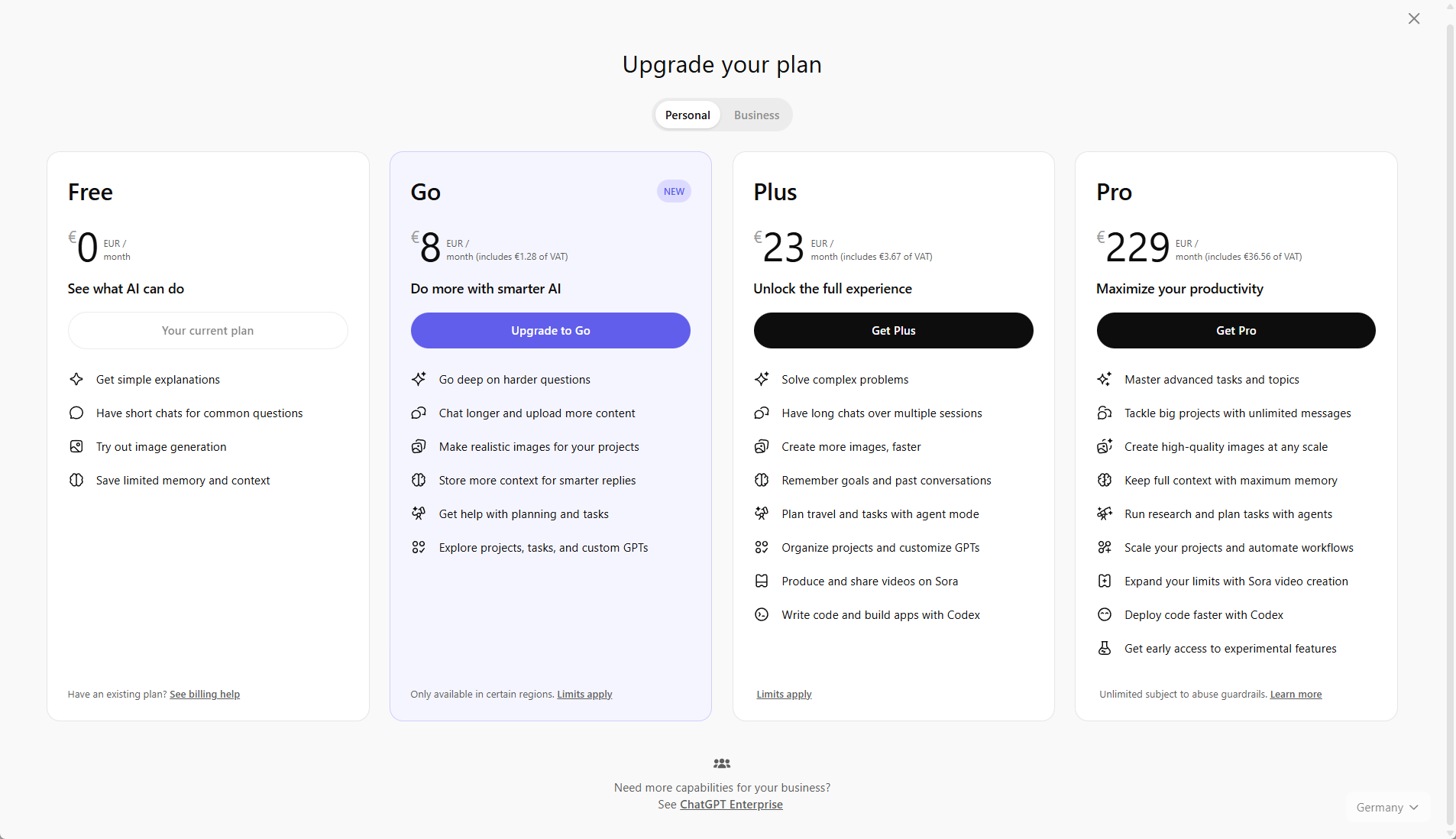

OpenAI brings cheaper subscription tier "Go" to more markets

OpenAI is significantly expanding the availability of ChatGPT Go, its budget-friendly subscription tier. After launching in India in August, the plan is now available in more than 70 additional countries, including markets across Europe and South America, according to an updated support page. In Germany, the service costs 8 euros per month. Beyond extended access to the flagship model, the subscription adds image generation, file analysis, and data evaluation, along with a larger context window for longer conversations. Users can also organize projects and build their own custom GPTs. However, the plan does not include access to Sora, the API, or older models like GPT-4o.

The broader rollout comes alongside a cost-saving adjustment to how the system handles queries. OpenAI recently removed the automatic model router for users on the free tier and the Go subscription. By default, the system now answers requests using the faster GPT-5.2 Instant. Users must manually switch to more powerful reasoning models when needed, as the automatic routing feature is now exclusive to the higher-priced plans.

Anthropic's AI store makes money while debating eternal transcendence

Anthropic’s autonomous kiosk is finally making money, but not without drama. In the second phase of Project Vend, stronger models, stricter processes, and an AI “CEO” turned losses into profits, while also exposing how easily AI agents can be manipulated, misunderstand authority, or ignore real‑world laws. The experiment shows that structure and guardrails matter more than raw intelligence when AI runs a business.

Meta preps "Mango" and "Avocado" AI models for 2026

Meta is developing new AI models for images, videos, and text under the codenames "Mango" and "Avocado." The release is planned for the first half of 2026, according to a report from the Wall Street Journal citing internal statements by Meta's head of AI, Alexandr Wang. During an internal Q&A with product chief Chris Cox, Wang explained that "Mango" focuses on visual content, while the "Avocado" language model is designed to excel at programming tasks. Meta is also researching "world models" capable of visually capturing their environment.

The development follows a major restructuring in which CEO Mark Zuckerberg personally recruited researchers from OpenAI to establish "Meta Superintelligence Labs" under Wang's leadership. The market for image generation remains fiercely competitive: Google recently released Nano Banana Pro, an impressive model known for its precise prompt adherence, and OpenAI followed a few weeks later with GPT Image 1.5. Meta itself last released the fourth generation of its Llama series in April and is currently collaborating with Midjourney and Black Forest Labs on its Vibes video feed.

Anthropic publishes Agent Skills as an open standard for AI platforms

Anthropic is releasing "Agent Skills" as an open standard at agentskills.io. The goal is to make skills interoperable across platforms, so a capability that works in Claude should work just as well in other AI systems. Anthropic likens this strategy to the Model Context Protocol (MCP), which it also published as an open standard, and says it is already working with ecosystem partners on the project. OpenAI has already adopted skills as well.

The company is also upgrading how skills work within Claude. Skills are essentially repeatable workflows that tailor the AI assistant to specific jobs. Administrators on Team and Enterprise plans can now manage skills from a central hub and push them to every user in their organization, though individual users can still turn them off.

Creating these skills has also gotten easier: users just describe what they need, and Claude helps configure it. Anthropic has also launched a directory of partner skills from companies like Notion, Canva, Figma, and Atlassian at claude.com/connectors. Developers can find technical documentation at platform.claude.com/docs, and skills are now live across Claude apps, Claude Code, and the developer API.


Source: Agentskills | Anthropic