AI search startup Perplexity has inked a $750 million contract with Microsoft to use its Azure cloud service. Bloomberg reports, citing people familiar with the matter, that the three-year deal gives Perplexity access to various AI models through Microsoft's Foundry program, including systems from OpenAI, Anthropic, and xAI.

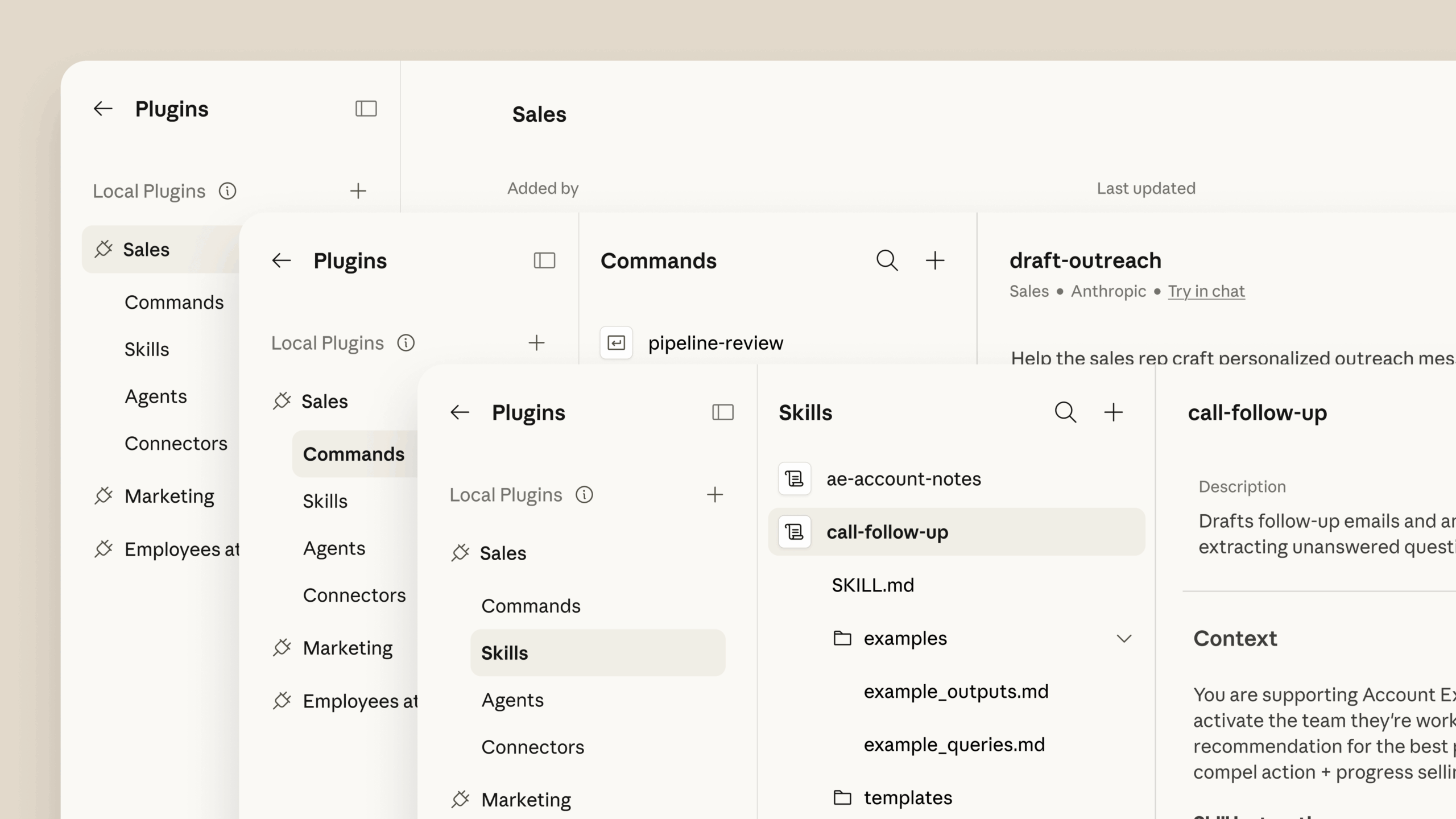

A Microsoft spokesperson confirmed to Reuters that Perplexity has chosen Microsoft Foundry as its primary platform for AI models. A Perplexity spokesperson told Bloomberg that the partnership provides access to leading models from xAI, OpenAI, and Anthropic.

Amazon Web Services remains the startup's main cloud provider, but that relationship may have come under strain last year, when AWS parent company Amazon sued Perplexity over a shopping feature that automatically places orders for users.